Prediction and Entropy of Languages


Shannon's information entropy [1] is defined by

$$H(Q) = -\sum_{n \in Q} P(n)\,\log_2 P(n),$$

where $P(n)$ is the probability of $n$, and the sum is over the elements of $Q$. Shannon's entropy is a measure of uncertainty; with a base-2 logarithm it is measured in bits.
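As a minimal sketch of the formula above, the following Python function estimates each $P(n)$ as the relative frequency of $n$ in a sample and then sums $-P(n)\log_2 P(n)$. The function name `shannon_entropy` is hypothetical and chosen only for illustration.

```python
import math
from collections import Counter
from typing import Hashable, Iterable


def shannon_entropy(samples: Iterable[Hashable]) -> float:
    """Entropy H(Q) in bits of the empirical distribution of `samples`.

    Each P(n) is estimated as count(n) / total for every distinct element n.
    """
    counts = Counter(samples)
    total = sum(counts.values())
    # Sum of -P(n) * log2 P(n) over the distinct elements of Q.
    return sum(-(c / total) * math.log2(c / total) for c in counts.values())


print(shannon_entropy("aabb"))  # two equally likely letters: 1.0
print(shannon_entropy("abcd"))  # four equally likely letters: 2.0
print(shannon_entropy("aaaa"))  # a single certain outcome: 0.0
```

The examples show the intuition behind "measure of uncertainty": a certain outcome carries 0 bits, and $k$ equally likely outcomes carry $\log_2 k$ bits.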

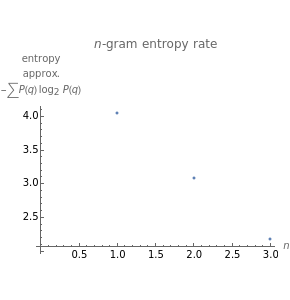

One application is measuring the redundancy of a language from the frequencies of its letter $n$-grams (single letters, pairs of letters, triplets, etc.). This Demonstration shows the frequencies of $n$-grams calculated from the United Nations' Universal Declaration of Human Rights in 20 languages and illustrates the entropy rate calculated from these $n$-gram frequency distributions. The entropy of a language is an estimate of the average information content of each letter in that language, so it is also a measure of the language's predictability and redundancy: the lower the entropy per letter, the more predictable and redundant the text.
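The sketch below shows one way to go from $n$-gram frequencies to per-letter entropy estimates. It rests on several assumptions that are not taken from the Demonstration itself: text is case-folded and reduced to letters only (spaces and punctuation dropped), $n$-grams overlap, and the entropy rate is estimated either as $H_n/n$ or as the conditional entropy $F_n = H_n - H_{n-1}$ (with $H_0 = 0$), where $H_n$ is the entropy of the $n$-gram distribution. The helper names (`ngram_counts`, `block_entropy`, `entropy_rate_estimates`) are hypothetical.

```python
import math
from collections import Counter


def ngram_counts(text: str, n: int) -> Counter:
    """Count overlapping letter n-grams (assumption: letters only, case-folded)."""
    letters = "".join(ch for ch in text.casefold() if ch.isalpha())
    return Counter(letters[i:i + n] for i in range(len(letters) - n + 1))


def block_entropy(text: str, n: int) -> float:
    """H_n: Shannon entropy in bits of the empirical n-gram distribution."""
    counts = ngram_counts(text, n)
    total = sum(counts.values())
    return sum(-(c / total) * math.log2(c / total) for c in counts.values())


def entropy_rate_estimates(text: str, max_n: int) -> list[tuple[int, float, float]]:
    """Return (n, H_n / n, F_n) for n = 1..max_n.

    F_n = H_n - H_{n-1} approximates the entropy of a letter given the n - 1
    letters before it. Both estimators are illustrative assumptions.
    """
    rows = []
    previous = 0.0  # H_0 = 0
    for n in range(1, max_n + 1):
        h_n = block_entropy(text, n)
        rows.append((n, h_n / n, h_n - previous))
        previous = h_n
    return rows


# Opening of Article 1 of the Universal Declaration of Human Rights (English).
sample = ("All human beings are born free and equal in dignity and rights. "
          "They are endowed with reason and conscience and should act towards "
          "one another in a spirit of brotherhood.")
for n, per_letter, conditional in entropy_rate_estimates(sample, 3):
    print(f"n={n}: H_n/n = {per_letter:.3f} bits, F_n = {conditional:.3f} bits")
```

Using `str.isalpha` keeps accented and non-Latin letters, which matters when comparing many languages. On a text this short, most higher-order $n$-grams occur only once, so the estimates for larger $n$ are unreliable (and $F_n$ can even come out negative); a full document such as the Declaration gives steadier values.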