Maximum Entropy Probability Density Functions

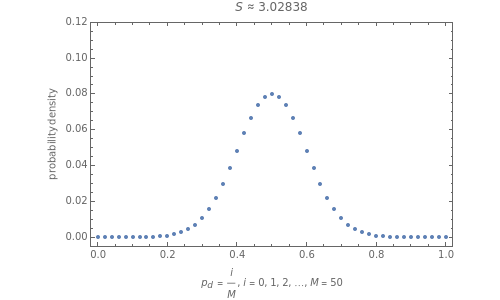

The principle of maximum entropy can be used to find the probability distribution, subject to specified constraints, that is maximally noncommittal regarding missing information about the distribution. In this Demonstration the principle of maximum entropy is used to find the probability density function of discrete random variables defined on the interval $(0,1)$, subject to user-specified constraints on the mean $\mu$ and the variance $\sigma^2$. The resulting probability distribution is referred to as an $A_p$ distribution [1]. The mean of the $A_p$ distribution associated with a proposition $A$ is the probability of that proposition. The variance of the $A_p$ distribution measures the confidence with which that probability is predicted, with a smaller variance indicating greater confidence. When only the mean is specified, the entropy $S$ of the $A_p$ distribution is maximal when the specified mean probability is $\mu = 0.5$, for which the solution is the uniform distribution. When both mean and variance are specified, the entropy $S$ of the $A_p$ distribution decreases as the specified variance decreases.
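As a hedged illustration, here is the standard Lagrange-multiplier reasoning. It is written for a continuous density on $(0,1)$ rather than for the Demonstration's discrete variables, and it rewrites the variance constraint as a constraint on $E[x^2]$:

$$
\max_{p}\; S[p] = -\int_0^1 p(x)\ln p(x)\,dx
\quad\text{subject to}\quad
\int_0^1 p\,dx = 1,\qquad
\int_0^1 x\,p\,dx = \mu,\qquad
\int_0^1 x^2\,p\,dx = \sigma^2 + \mu^2
$$

$$
p(x) = \frac{e^{\lambda_1 x + \lambda_2 x^2}}{Z(\lambda_1,\lambda_2)},\qquad
Z(\lambda_1,\lambda_2) = \int_0^1 e^{\lambda_1 x + \lambda_2 x^2}\,dx,\qquad
S = \ln Z - \lambda_1 \mu - \lambda_2\left(\sigma^2 + \mu^2\right)
$$

With only the mean constrained, $\lambda_2 = 0$ and

$$
p(x) = \frac{\lambda_1 e^{\lambda_1 x}}{e^{\lambda_1} - 1},\qquad
\mu = \frac{e^{\lambda_1}}{e^{\lambda_1} - 1} - \frac{1}{\lambda_1}.
$$

The limit $\lambda_1 \to 0$ gives $\mu = 1/2$, $p(x) = 1$ and $S = 0$. This is the largest differential entropy any density on $(0,1)$ can have, which is consistent with the maximum at $\mu = 0.5$.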
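The Demonstration's own implementation is not shown here, so the following is only a minimal sketch under stated assumptions. The discrete support is assumed to be a grid of `n = 200` equally spaced midpoints in $(0,1)$, and the Lagrange multipliers are found by damped Newton steps on the convex dual. The names `maxent_on_grid` and `entropy`, the grid, and the solver are hypothetical choices for illustration. The sketch relies on the maximum-entropy solution having the form $p_i \propto \exp(\lambda_1 x_i + \lambda_2 x_i^2)$, with $\lambda_2 = 0$ when only the mean is constrained.

```python
import math


def maxent_on_grid(mu, var=None, n=200, tol=1e-9, max_iter=200):
    """Maximum-entropy probabilities on n grid midpoints in (0, 1).

    Hypothetical helper (the grid and solver are assumptions). Constraints:
    probabilities sum to 1, the mean is mu and, if var is given, the variance
    is var. The maximiser has the form p_i ~ exp(lam[0]*x_i + lam[1]*x_i**2);
    lam minimises the convex dual log Z(lam) - lam . targets (damped Newton).
    """
    if not 0.0 < mu < 1.0:
        raise ValueError("mu must lie in (0, 1)")
    if var is not None and not 0.0 < var < mu * (1.0 - mu):
        raise ValueError("var must lie in (0, mu * (1 - mu))")
    xs = [(i + 0.5) / n for i in range(n)]
    # Feature vectors f(x) and the expectations E[f(x)] they must match.
    feats = [[x] if var is None else [x, x * x] for x in xs]
    targets = [mu] if var is None else [mu, var + mu * mu]  # E[x^2] = var + mu^2
    k = len(targets)

    def dual_and_probs(lam):
        logits = [sum(l * f for l, f in zip(lam, row)) for row in feats]
        top = max(logits)  # shift by the largest logit to avoid overflow in exp
        w = [math.exp(z - top) for z in logits]
        total = sum(w)
        dual = top + math.log(total) - sum(l * t for l, t in zip(lam, targets))
        return dual, [wi / total for wi in w]

    lam = [0.0] * k  # lam = 0 corresponds to the uniform distribution
    dual, p = dual_and_probs(lam)
    for _ in range(max_iter):
        mean_f = [sum(pi * row[a] for pi, row in zip(p, feats)) for a in range(k)]
        grad = [mean_f[a] - targets[a] for a in range(k)]  # gradient of the dual
        if max(abs(g) for g in grad) < tol:
            break
        # Hessian of the dual = covariance of the features under the current p.
        hess = [[sum(pi * (row[a] - mean_f[a]) * (row[b] - mean_f[b])
                     for pi, row in zip(p, feats)) for b in range(k)]
                for a in range(k)]
        if k == 1:
            step = [grad[0] / hess[0][0]]
        else:  # solve the 2x2 system hess @ step = grad
            det = hess[0][0] * hess[1][1] - hess[0][1] * hess[1][0]
            step = [(hess[1][1] * grad[0] - hess[0][1] * grad[1]) / det,
                    (hess[0][0] * grad[1] - hess[1][0] * grad[0]) / det]
        t = 1.0  # halve the Newton step until the dual does not increase
        while True:
            trial = [l - t * s for l, s in zip(lam, step)]
            new_dual, new_p = dual_and_probs(trial)
            if new_dual <= dual or t < 1e-12:
                break
            t *= 0.5
        lam, dual, p = trial, new_dual, new_p
    return xs, p, lam


def entropy(p):
    """Discrete Shannon entropy S = -sum p_i ln p_i (natural log)."""
    return -sum(pi * math.log(pi) for pi in p if pi > 0.0)


if __name__ == "__main__":
    for mu in (0.1, 0.3, 0.5):
        _, p, _ = maxent_on_grid(mu)
        print(f"mean only, mu={mu}: S = {entropy(p):.4f}")
    for var in (0.05, 0.02, 0.005):
        _, p, _ = maxent_on_grid(0.5, var)
        print(f"mu=0.5, var={var}: S = {entropy(p):.4f}")
```

Here the entropy is the discrete Shannon entropy on the grid, so the uniform case gives $\ln n$ rather than the continuous value $0$. With $\mu = 0.5$, the decrease of $S$ as the variance shrinks holds for variances below about $1/12$, the variance of the uniform (mean-only) solution. For larger specified variances the solution becomes U-shaped and the entropy falls again.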