Web Scraping and Sentiment Analysis

13

|Import a PDF File from the Web

Introduction

Previous sections have analyzed text through sentiment analysis and a search for keywords. This section will apply those concepts to an external PDF file, which is a much larger document. This analysis will also import a more extensive list of keywords to compare with the PDF document to identify themes. So several datasets will be imported, compared to manually typing in text as we did in one of the first chapters of the course.

Importing a PDF File and Spreadsheet

Importing a PDF File and Spreadsheet

The Import command has been used in several chapters of this course, and we’ll use it again below to import a PDF in plain text format and store that block of text as a string with the symbol name fullText. Notice there is a second argument to Import that returns the block of text as plain text with no formatting. Import can also return HTML code, just images on the website or just the hyperlinks on a website. This is a large, multipage document, so a semicolon is used to suppress the output. The data is stored, just not printed to the screen:

In[]:=

fullText=Import["https://corporate.mcdonalds.com/content/dam/gwscorp/nfl/investor-relations-content/quarterly-results/Exhibit%2099.1.pdf","Plaintext"];

The Import command can also be used to bring in spreadsheet data and store it as a list. But in our case, instead of somehow sending everyone working through this case a CSV or XLS file, we’ll use the Wolfram Data Repository. Wolfram Data Drop allows anyone to import this exact same data in their respective copies of Mathematica. This shared databin has a specific name, and evaluating it displays a summary:

In[]:=

Databin["1oOD19vSl"]

Out[]=

Databin

Instead of the summary, we want to extract all the elements in the stored list, and Normal returns the dictionary of positive words as a list. This list can then be stored with the symbol posWords so we can compare it against the large PDF document in one of the next steps of the analysis:

In[]:=

posWords=Normal[Databin["1oOD19vSl"]]

Out[]=

{strong,strength,growth,positive,increase,increased,grow,grew,surpassing,milestone,milestones,achievement,momentum,convenience,engagement}

Negative words are stored in a different databin. They can also be stored with a symbol negWords for analysis later:

In[]:=

negWords=Normal[Databin["1oOD0xz8M"]]

Out[]=

{weak,weakness,decline,poor,stagnant,decrease,shrink,shrinking,almost,next quarter,updated,changes,exclusion,exclusions,temporarily,layoff,cut,revised,inflation,pandemic,covid}

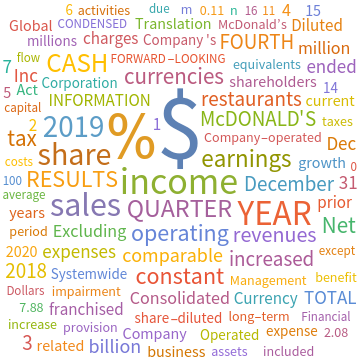

With a larger document, it is often useful to create a word cloud or other type of summary to confirm the webpage was imported as intended. This word cloud seems to represent the financial document fairly well:

In[]:=

WordCloud[fullText]

Out[]=

There are also several functions to query the amount of data that is stored in any particular symbol. StringLength tells us there are over 16,000 characters (letters, spaces or punctuation) in the imported block of text:

In[]:=

StringLength[fullText]

Out[]=

16314

Summary

Summary

The concepts for this example are similar to earlier examples but expand to use several data sources that are too large for human inspection. This is the value of writing programs in Wolfram Language that can be used repeatedly for different PDF documents to compare them. Without a program, there would be no practical way to do this type of close inspection.