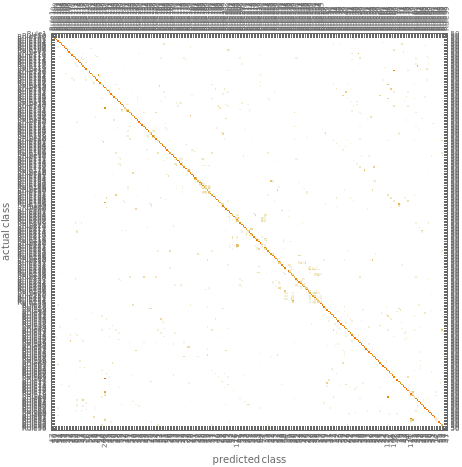

0.714902

0.0627451

0.0908235
